After years of experience of working with ROS and robotics, we’ve seen a fair share of weird and surprising bugs and issues. I wanted to share our experiences by writing this article about some of the most common issues we encounter and how we solve and debug them while developing robots with ROS 2.

Many of these issues might be difficult to spot or find a reason for during normal debugging, as they most often do not have any predefined exception messages. Some of them might not produce a crash log at all, and could instead just deadlock the execution or even lead to a collision on a real robot.

This list of issues was written at the time of using Foxy/Galactic ROS 2 distribution, but many of them will still be relevant in newer distributions. The issues are listed in no particular order. Hopefully these tips help both robotics enthusiasts who just have started their robotics journey and also the already existing experts in the field. And maybe some of the known issues will even gain some traction for finding good solutions to them.

Table of Contents

Differing use_sim_time parameter value across Nodes

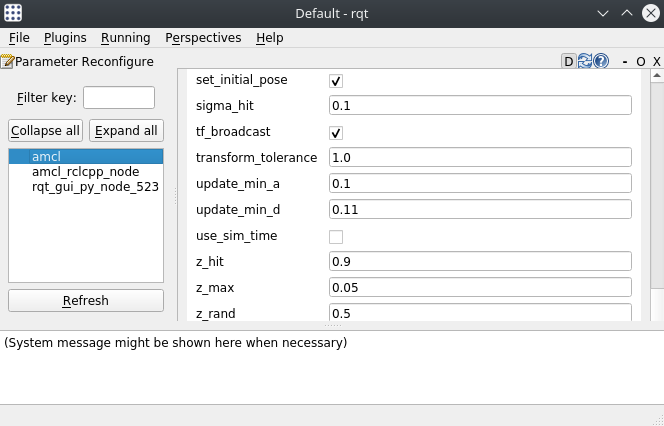

Parameter use_sim_time allows ROS 2 Nodes to use abstracted time which is most commonly published by a simulator, such as Gazebo. We used to have cases during our development where one or two Nodes had this accidentally set to a different value compared to the other Nodes. This causes unexpected behavior in the system, which is often difficult to link with incorrect sim-time value.

In general, when running your Nodes with the simulation, use_sim_time should be set to True, and once you are ready to deploy your code on your real-life robot, it should be set to False. If the parameter is not set at all, it will default to False.

If you’ve set the parameter differently for some of the Nodes, one common thing you might see failing are the transformation trees. When some of the Nodes are running in simulated time and some in real time, the published transformations can have non-matching timestamps, in which case you would start seeing following kind of errors related to extrapolation into the future or past:

Lookup would require extrapolation into the future. Requested time <x> but the latest data is at time <y>, when looking up transform from frame <frame1> to frame <frame2>

This error itself can look confusing, but just simply means that a Node is expecting a transform between two frames to exist close to x timestamp, but it was never published. This can be due to wrongly set use_sim_time parameter, but there are also lots of other potential causes for it, such as incorrect time synchronization between devices or low message rates.

To easily check if all of your Nodes use the correct sim time value, you can use for example rqt’s “Dynamic Reconfigure” feature to view each Node’s parameters and verify that they have the use_sim_time parameter set as expected.

But what would be the best ways to make sure that all the Nodes have the sim-time parameter set correctly? In the very beginning, we used to declare the use_sim_time parameter in our parameter files for each Node individually. And since we use mostly the same launch files and parameter files for both the simulation and the real robot, we had to override the parameter by substituting it with a value we get as a launch argument. This required declaring use_sim_time in every launch file we had and passing it through multiple layers of them, making things quite verbose. Also, it was easy to forget to substitute the parameter for each individual Node declared in launch files.

Later on, we started to use the “SetParameter”-method which sets the sim time globally for the same launch context. This means that any Node or Launch include which is declared after this parameter set, will run under this sim time. This allowed us to get rid of the verbosity of launch argument declarations, and ensure that all the Nodes run with the same use_sim_time value.

import launch_ros

def generate_launch_description(): return LaunchDescription([ launch_ros.actions.SetParameter(name='use_sim_time', value=True) … ])

However, this method isn’t foolproof either. SetParameter needs to be declared in every entrypoint launch file, so if your system has multiple entry points, it needs to be re-declared multiple times. During development and debugging, if single Nodes or Launch files are launched independently, different from normal conditions, it might be very easy to forget to set the parameter correctly. Also, you’ll need to remember to do it for any external Node you might launch, such as Rviz. It is good to note that explicitly declaring use_sim_time parameter for a Node in the parameter file or in the launch file will override the effect of SetParameter.

I’m hoping to someday be able to set use_sim_time as an environment variable for ROS 2, which would further use it for all the Nodes that are run on that device.

Synchronous calls in callbacks

One common way to architecture Node is to implement it as a class and to make it spin with a blocking spin() – function. Currently, with default Node executor settings, it is not possible to call a service synchronously from another callback in this way as it will lead into a deadlock. In my experience, this is one of the most common issues developers bump into, especially when coming from ROS 1 to ROS 2.

A common pattern in our code is that we call multiple other services from another service callback, and we’ll need to wait for their results before returning the main service result. In this case, it doesn’t really matter if you call the service with synchronous call() or asynchronous call_async(), the service response will never arrive anyway due to the executor not spinning while it is executing the callback. And this cannot be even fixed by manually spinning as spinning in a callback would lead into recursive spinning, which isn’t allowed. The problem exists for both rclpy and rclcpp.

The solution is to use MultiThreadedExecutor with either MutuallyExclusiveCallbackGroup or ReentrantCallbackGroup, so that the Node is still spinning while the callback is executing. To find examples and to get a deeper understanding into the topic, you can check out our blog post “Deadlocks in rclpy and how to prevent them with use of callback groups”.

There has been discussion around this topic in many places, and there exists an open issue in rclcpp for it. Hopefully there will be some kind of solution to this eventually.

Invalid odometry data

Over the years we have learned how important valid odometry data is. It plays a key role in default navigation and SLAM solutions in ROS 2, so any weirdness in odometry data will directly affect autonomous driving capabilities.

Some most common reasons for odometry issues are:

- Inaccuracy (we recommend using motor encoders!)

- Relying on one odometry source – use sensor fusion, since almost all of the odometry sources have their weaknesses; wheels slip, IMU drifts, etc.

- Timestamps – try to stamp the odometry messages as close to measurement as possible

- Delays in the system – Is the data still fresh when it is received by the Node that uses it?

- Twist velocities in the odometry message being calculated incorrectly or reported in a wrong frame

We had an example some time ago related to the last point, when we integrated Boston Dynamics Spot to use nav2. It walked autonomously very well into one direction, but to others it walked as if it had taken a couple glasses of something stronger. It walked backwards, sideways, very slowly or doing criss cross movements with its legs, without following the given path. After multiple long debugging sessions, we ended up finding out that the odometry velocities in the ROS 2 spot_driver are reported in the wrong child frame. Since then, this has been already fixed for the ROS 1 version of the driver.

Freedom Robotics has published an amazing article describing tips on how to check your odometry on wheeled robots. You should definitely go through it before jumping to use nav2, amcl or slam-toolbox. The “overlapping lidar scans” debugging trick has especially proven out to be very useful and has helped us to fix many issues.

DDS issues

Sometimes it feels like no matter how much you debug your code, you don’t seem to find any reasonable explanation for the weird behavior. If you encounter this kind of issue related to message delivery (topics, services, actions), you might want to check if you get the expected result with a different DDS middleware.

Instruction on how to change the DDS implementation can be found from the official ROS 2 documentation. If your problem still persists with another middleware, the issue is most likely then somewhere else.

One of my personal favorite DDS issues from ROS 2 Foxy is an issue related to creating service clients, which is reproducible only when using Fast-RTPS. If a service client is re-created exactly 22 times without it being destroyed in between, the whole service will get deadlocked. To this day, I’m still wondering where this magic number 22 comes from.

Luckily, there have been less and less issues with DDS middleware with the newer ROS 2 releases and DDS versions.

Remnant Nodes running in the background

I remember multiple times when I’ve spent quite a bit of time pulling my hair out while trying to understand what is wrong with my code, while the real reason has been some remnant Nodes running in the background.

There have been cases where the exact same Node has been running accidentally twice, which is why some action calls have behaved incorrectly. We have also had cases where we’ve had either the localization Node running twice or localization and mapping Nodes running simultaneously, causing map-to-odom transformation being published from two different sources. It can take a while to figure out why the robot is seemingly jumping back and forth on the map.

Some possible reasons for these sneaky background-running Nodes are:

- Developer made an error during launch file editing that led into a crash during bringup. In some cases, this might leave some launched Nodes running in the background.

- Node was already running through another launch file or in another terminal (with ROS, we are probably all used to having 10 terminals open simultaneously)

- You have some neat custom-logic to start your Nodes from the code, with for example using Python’s

subprocess.Popen, but the process is not stopped correctly when expected.

In these cases, ros2 node list and htop commands are your best friend, as they can display all the currently running Nodes and processes.

Parameter changes in config files are not applied without rebuilding: ament_python

So, you have a ROS 2 package that is created using Python and to avoid rebuilding after each of your changes, you’ve used –symlink-install tag. You’ve modified some of your Node parameters in your configuration file, but you realize that, for some reason, none of these changes take effect.

There is a known issue in colcon-core that configuration files are not symlinked correctly for Python packages. The only way is to rebuild your package for the parameter changes to take effect. The issue doesn’t exist when the package is built with CMake, so to overcome this, all of our internal packages are built this way, with Python support added on top. The Robotics Back-End has published a great article on how to do this.

Build related easy-to-forget steps

Lastly, I’ll list here some of the common build-related mistakes that most of the new developers find out about the hard way, and even the most seasoned ROS developers still do from time to time:

Rebuild

Did you remember to colcon build your package after the new changes? Especially when coming from Python, it might be easy to forget to do so. This needs to be done for example after adding new launch files, Nodes, message interfaces or configuration files.

Make sure you also build in the correct location, in your workspace root. Very often, I used to make a mistake by going into the src -folder and building there. The build will finish successfully, but the changes won’t take effect.

Source

After building, you will need to remember to source your changes, otherwise they won’t take effect.

Shebang line for Python Nodes

This is easy to forget to have from the beginning of your rclpy Node files. All the python Nodes should start with

#!/usr/bin/env python3

Otherwise, you’ll encounter an error which might not be easy to figure out:

OSError: [Errno 8] Exec format error:

Make Python Nodes executable

If you are using CMakeLists to declare your Python Nodes, you will need to make the Node files executable. On Ubuntu, this can be done with

chmod +x <script_name.py>

Failing to do so will result in following exception

launch.substitutions.substitution_failure.SubstitutionFailure: executable <node_name> not found on the libexec directory

Final words

Hopefully you will find some of these tips useful for your future development sessions. Happy debugging!