Since the last blog post, we have taken the first steps towards self-driving. We would like our robot to use its sensors to observe the world and make decisions based on that data, and also to use prebuilt maps of buildings to assist with autonomous navigation. On construction sites, for example, a 3D BIM model of the building is usually available and can be given to the robot as a map. In this post, I will give a quick overview of how we took the first steps towards navigation, localization and self-driving using ROS.

Preparing the robot and simulation

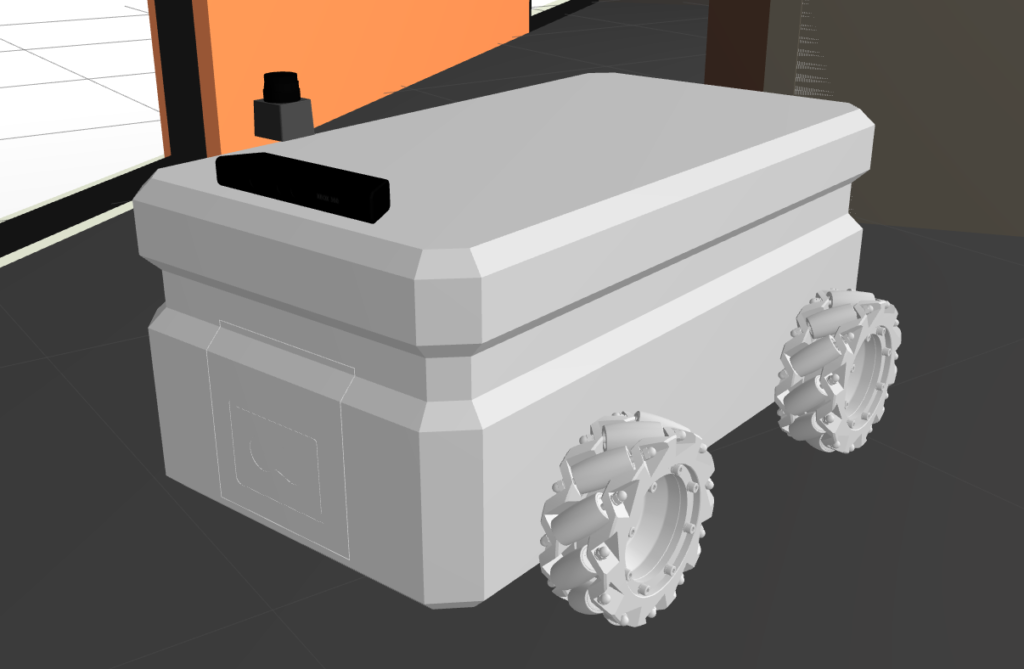

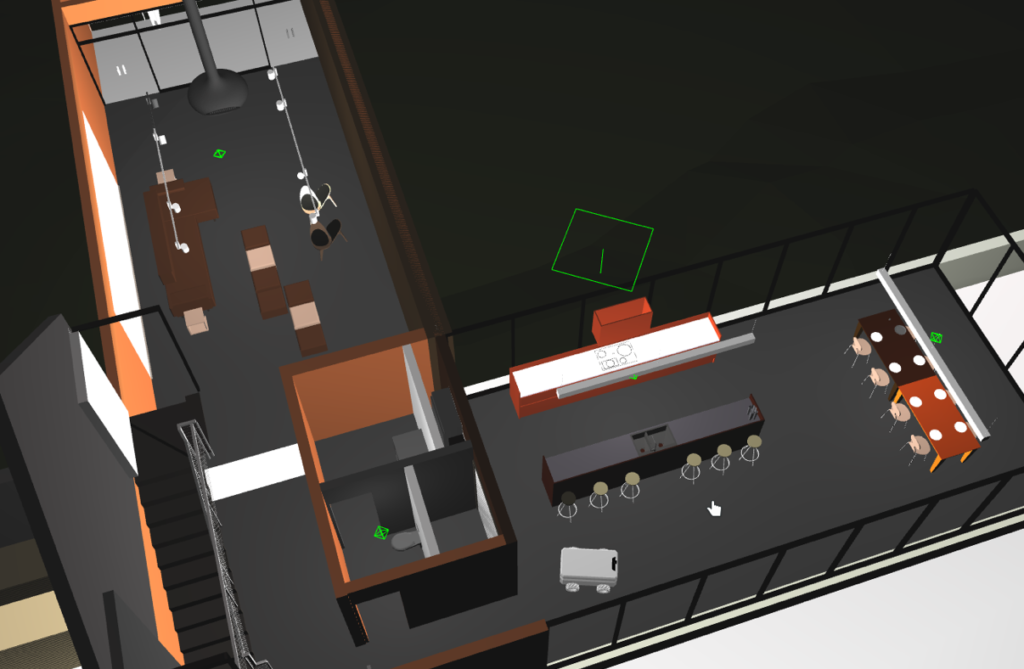

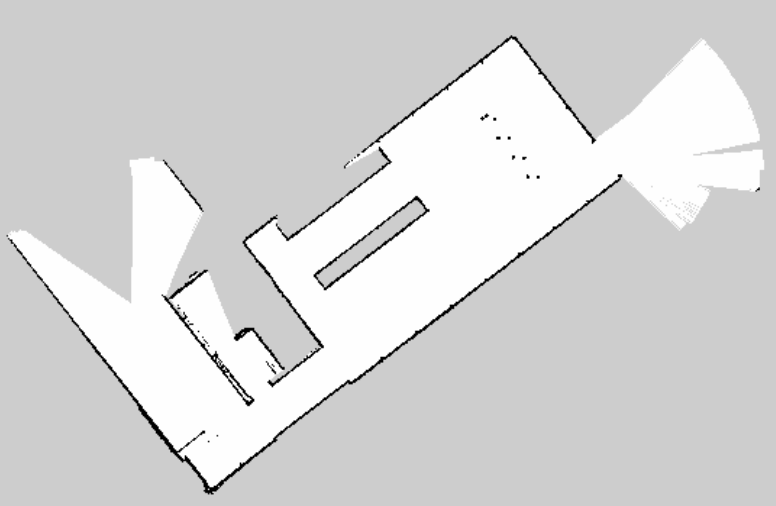

First of all, we needed to prepare our robot and environment for these tasks. We attached a Kinect camera and a Hokuyo laser sensor to our simulated robot based on these instructions. We downloaded a simple BIM (building information model) from the Revit samples (rac_basic_sample_project.rvt), exported it from Revit in .ifc format, and then converted it to .dae format in Blender with the IfcBlender add-on.

The conversion is not perfect: some of the textures are not preserved, and we had to add lights to maintain a reasonable lighting level. Overall, though, the model is suitable for our needs. We further modified the original building so that we only operate on the first floor, which includes the kitchen and living room. To get to the living room the robot would need to descend a small set of stairs, so we will mostly drive in the kitchen.

Our robot with the sensors attached and the environment

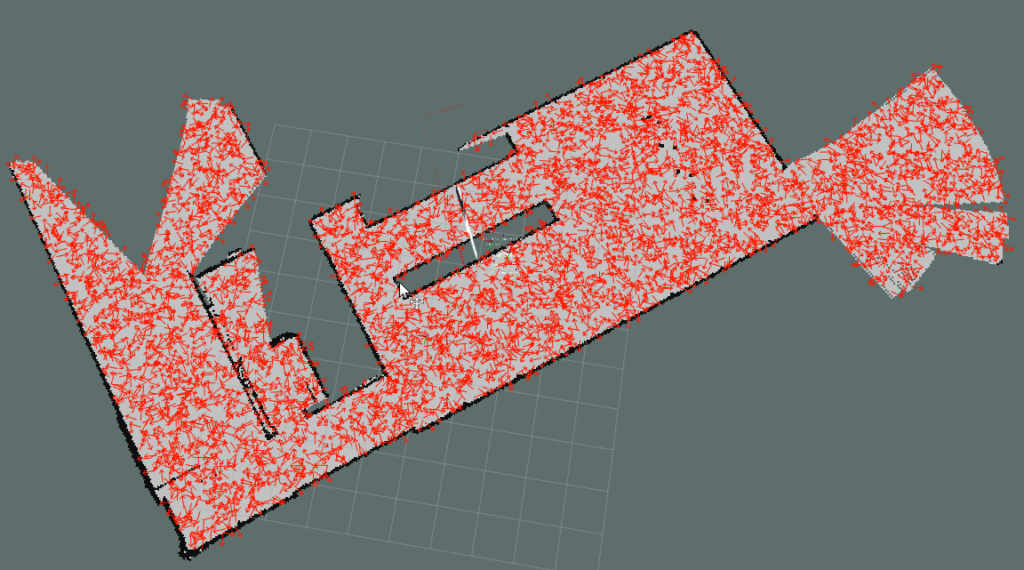

Creating an indoor map

One of our open questions is how to create a 2D map from the BIM in a format that can be compared directly with the sensor data for robot localization. One easy solution is to simply drive the robot around in the simulation and build a map from the collected laser sensor and odometry data. This is exactly what gmapping, a ROS package for SLAM (Simultaneous Localization and Mapping), can do for us. Using gmapping, I drove the robot around the kitchen area and obtained the map in .yaml format.
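To compare such a map with sensor data, world coordinates have to be converted to grid cells and back. The sketch below shows that conversion, assuming the map metadata carries a resolution (metres per cell) and an origin (the world pose of the lower-left cell), as map_server-style YAML files do. The numbers and the function names `world_to_cell` and `cell_to_world` are made up for illustration; they are not taken from our kitchen map.

```python
import math

# Hypothetical metadata in the style of a map_server YAML file.
# These values are placeholders, not the ones from our kitchen map.
MAP_META = {
    "resolution": 0.05,              # metres per grid cell
    "origin": (-10.0, -10.0, 0.0),   # world pose (x, y, yaw) of the lower-left cell
}


def world_to_cell(x, y, meta=MAP_META):
    """Return the (column, row) of the grid cell containing world point (x, y).

    Assumes the map is not rotated (yaw == 0).
    """
    ox, oy, _yaw = meta["origin"]
    col = math.floor((x - ox) / meta["resolution"])
    row = math.floor((y - oy) / meta["resolution"])
    return col, row


def cell_to_world(col, row, meta=MAP_META):
    """Return the world coordinates of the centre of cell (col, row)."""
    ox, oy, _yaw = meta["origin"]
    res = meta["resolution"]
    return ox + (col + 0.5) * res, oy + (row + 0.5) * res
```

With these placeholder values, a point just next to the world origin falls roughly in the middle of a 20 m x 20 m grid, 200 cells from the lower-left corner in each direction.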

2D map of the kitchen area

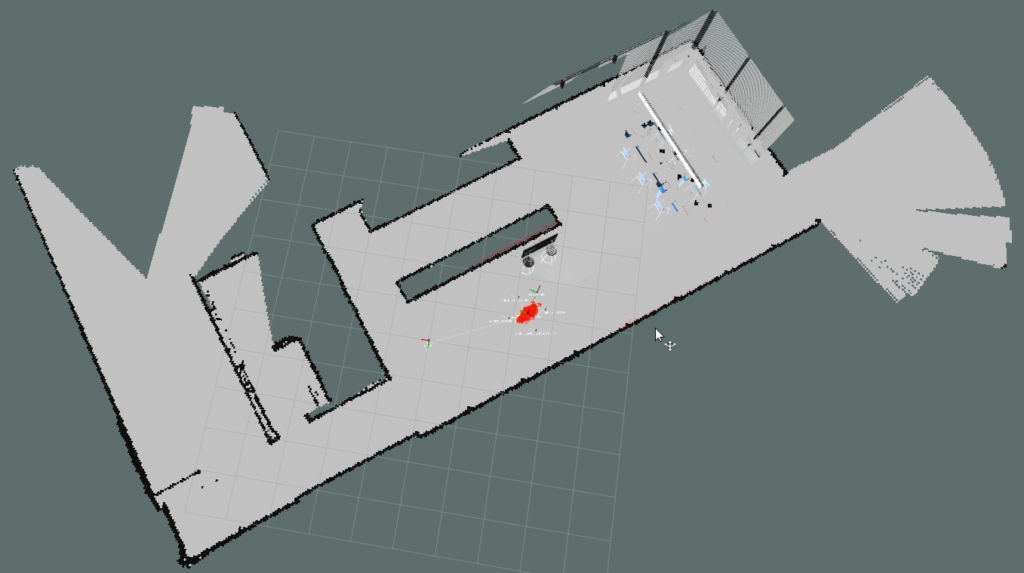

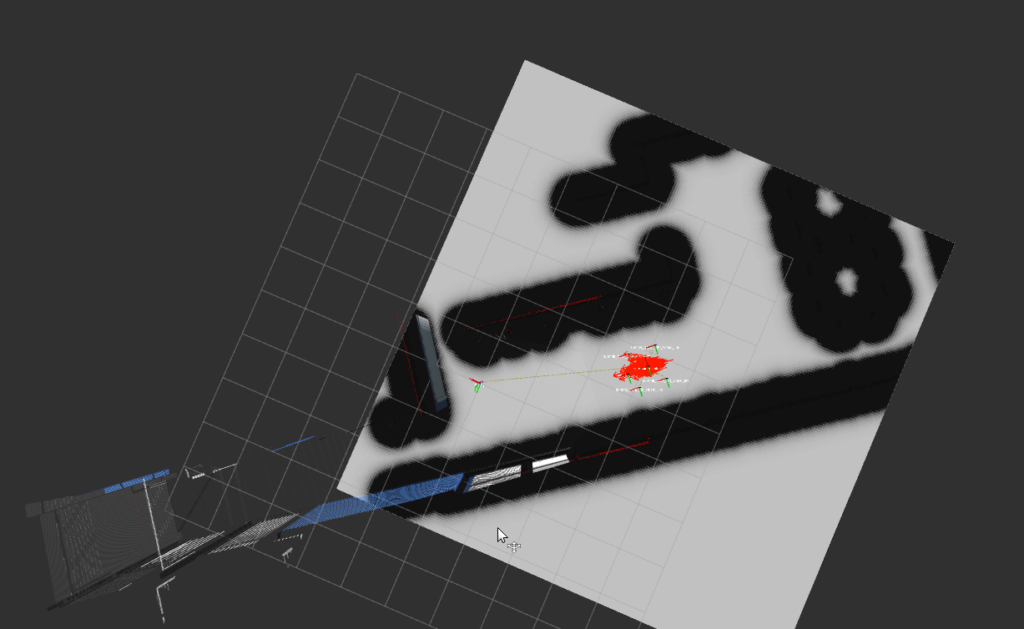

Now that we have a 2D map of the environment, our robot can use it directly without needing to explore the building by itself. Before moving on to navigation tasks, we still need one thing: localization. Again, ROS provides a ready-made package for this, called amcl. It uses the Adaptive Monte Carlo Localization algorithm to localize the robot based on a given map and sensor data. When initialized randomly, it spreads candidate robot poses (red arrows in the image) all around the known map. As the sensors gather more information from the environment, the algorithm eliminates candidates that do not match the observations, and the estimate of the robot’s location becomes more and more precise.
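The idea behind Monte Carlo localization can be shown with a toy example. The sketch below is not the amcl implementation: it assumes a robot moving along a 1D corridor that measures its distance to a wall at a known position, and it keeps a fixed number of particles (the adaptive part, which changes the particle count, is left out). All names and noise values are chosen for illustration only.

```python
import math
import random


def mcl_step(particles, move, measured, wall=10.0,
             motion_noise=0.05, sensor_noise=0.2, rng=random):
    """One predict / weight / resample cycle of a toy 1D particle filter."""
    # 1. Motion update: shift every particle by the odometry, plus some noise.
    moved = [p + move + rng.gauss(0.0, motion_noise) for p in particles]
    # 2. Measurement update: weight each particle by how well the distance
    #    it expects to the wall matches the measured distance.
    weights = [
        math.exp(-0.5 * ((measured - (wall - p)) / sensor_noise) ** 2)
        for p in moved
    ]
    if sum(weights) == 0.0:
        # No particle explains the measurement; fall back to uniform weights.
        weights = [1.0] * len(moved)
    # 3. Resampling: unlikely particles die out, likely ones are duplicated.
    return rng.choices(moved, weights=weights, k=len(moved))


def spread(particles):
    """Standard deviation of the particle positions."""
    mean = sum(particles) / len(particles)
    return math.sqrt(sum((p - mean) ** 2 for p in particles) / len(particles))


rng = random.Random(0)
true_x = 2.0
# Random initialization: particles spread over the whole corridor.
particles = [rng.uniform(0.0, 10.0) for _ in range(500)]
initial_spread = spread(particles)

for _ in range(5):
    true_x += 0.5  # the robot drives forward
    measured = (10.0 - true_x) + rng.gauss(0.0, 0.2)  # noisy range to the wall
    particles = mcl_step(particles, 0.5, measured, rng=rng)

estimate = sum(particles) / len(particles)
final_spread = spread(particles)
```

After a few steps the particles cluster around the true position, which is the same effect as the red arrows collapsing around the robot.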

Amcl location predictions (red arrows) in the initial state and after driving a short distance.

Video 1. Localization

First steps in self-driving

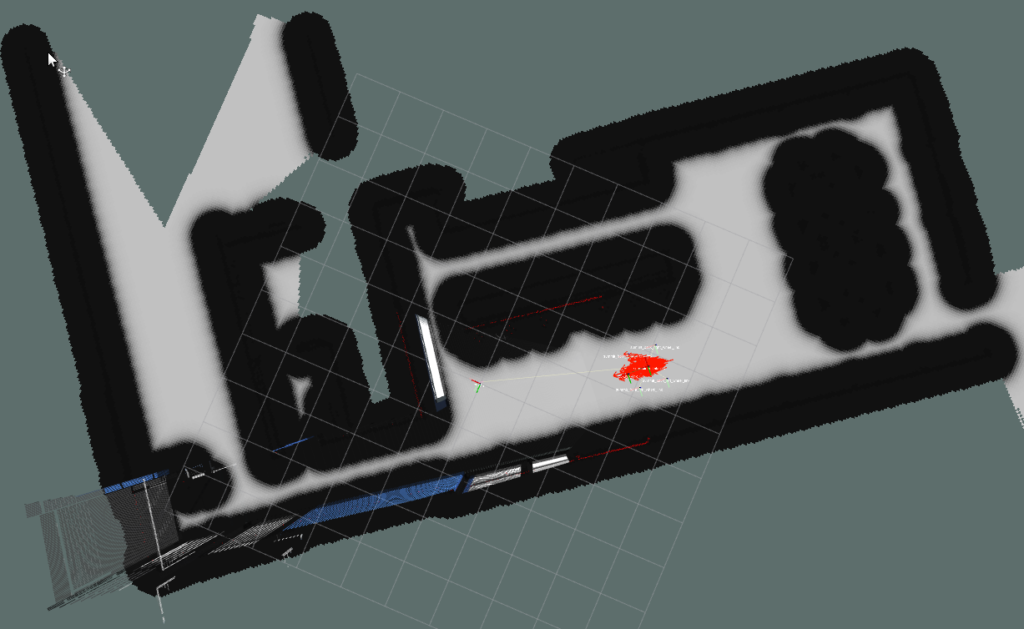

With localization working, we could then move on to the navigation tasks. The move_base ROS package provides path planning and collision-free navigation. Essentially, it maintains two maps of the environment: a global costmap and a local costmap. The global costmap is built from the original map and shows all the locations the robot can safely reach. The local costmap uses sensor readings to check the actual state of the environment, so that if, for example, a new unknown object blocks the robot’s way, it shows up in the local costmap.
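A heavily simplified sketch of the two costmaps might look like the following. It assumes binary cells (free or blocked) instead of graded costs, inflates obstacles by a fixed number of cells to account for the robot’s size, and treats the local costmap as the static map plus sensed obstacles. The grid, the sensed obstacle and the function names are all hypothetical.

```python
def inflate(grid, radius):
    """Block every cell within `radius` cells of an obstacle (square neighbourhood)."""
    rows, cols = len(grid), len(grid[0])
    out = [row[:] for row in grid]
    for r in range(rows):
        for c in range(cols):
            if grid[r][c]:
                for rr in range(max(0, r - radius), min(rows, r + radius + 1)):
                    for cc in range(max(0, c - radius), min(cols, c + radius + 1)):
                        out[rr][cc] = 1
    return out


def overlay(grid, sensed_obstacles):
    """Return a copy of `grid` with the sensed obstacle cells marked as blocked."""
    out = [row[:] for row in grid]
    for r, c in sensed_obstacles:
        out[r][c] = 1
    return out


# Hypothetical static map: 1 = obstacle, 0 = free.
STATIC_MAP = [
    [1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]

# Global costmap: the known map, inflated by one cell.
global_costmap = inflate(STATIC_MAP, radius=1)

# Local costmap: the sensors also report an object at row 3, column 4
# that is not in the static map.
local_costmap = inflate(overlay(STATIC_MAP, [(3, 4)]), radius=1)
```

The object seen by the sensors blocks cells only in `local_costmap`, while `global_costmap` still reflects the original map.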

In real life, the map taken from the BIM model of the building will differ from the real surroundings. A wall might not have been built yet, or a pile of bricks might be in the way, and our robot should adjust to reality while still being able to localize itself. Together, the two costmaps are used to plan a safe path for the robot to the given goal location. When the path is ready, the package controls the robot so that it stays on the planned path and navigates to the goal coordinates.
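To illustrate how a newly sensed obstacle changes the plan, here is a small grid planner. It uses breadth-first search as a stand-in and is not the planner that move_base actually runs; the grid, the "pile of bricks" cell and the function name `plan_path` are assumptions made for this example.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Shortest 4-connected path of (row, col) cells from start to goal, or None."""
    rows, cols = len(grid), len(grid[0])
    if grid[start[0]][start[1]] or grid[goal[0]][goal[1]]:
        return None
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back from the goal to the start to recover the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if (0 <= nr < rows and 0 <= nc < cols
                    and not grid[nr][nc] and nxt not in came_from):
                came_from[nxt] = cell
                queue.append(nxt)
    return None


# Hypothetical map derived from the BIM: 1 = wall, 0 = free.
BIM_MAP = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]

# Plan on the prebuilt map alone: straight along the top row.
planned = plan_path(BIM_MAP, (0, 0), (0, 4))

# The sensors then report a pile of bricks at (0, 2), so the robot replans.
with_bricks = [row[:] for row in BIM_MAP]
with_bricks[0][2] = 1
replanned = plan_path(with_bricks, (0, 0), (0, 4))
```

On the prebuilt map the path runs along the top row; once the bricks are added, the replanned path goes around the wall through the bottom row instead.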

Global and local costmaps

You can see a simple example using the navigation package in video 2. The robot is given a goal and carefully navigates to it without colliding with any walls or objects. All of the previously mentioned packages have parameters that still need to be tuned to get better localization and navigation performance. Some problems also persist with the strafing mode: localization becomes unstable when the robot strafes (video 3), and the world physics are not properly defined, which allows the robot to defy gravity (video 4). These are most likely caused by how the movement of the mecanum wheels is defined with the Planar Move Plugin.

Next, we’ll continue working on collision avoidance with moving objects, probably with some reinforcement learning approaches. Stay tuned!

Video 2. Path planning

Video 3. Strafing localization

Video 4. Gravity problem