The XploreR project was the result of eight weeks of work in cooperation with the Karelics company for the “Robotics and XR” course offered by the University of Eastern Finland and lectured by Professor Ilkka Jormanainen. This blog post gives an overview of the project.

The goal of the XploreR project was to apply autonomous exploration using a single robot, and then to combine the exploration algorithm running on the mobile robot with a virtual reality environment for visualizing the collected data. On the physical side, the SAMPO2 robot was outfitted with the Karelics Brain software and all the necessary hardware, and the SLAM algorithm was already implemented and ready for use. The robot mapped the environment while moving around autonomously according to the goal poses set by the exploration algorithm. On the digital side, i.e., the Unity scene, the goal was to view the robot’s sensor data and the 2D map generated by the SLAM algorithm.

The project’s potential use cases include exploring unknown environments with the robot while a human user remotely monitors, and possibly controls, the robot and its navigation. One example is a natural disaster area, where potential victims may be found in places that humans cannot traverse. Another is space exploration, in which the robot explores new planets or other celestial bodies.

Overview

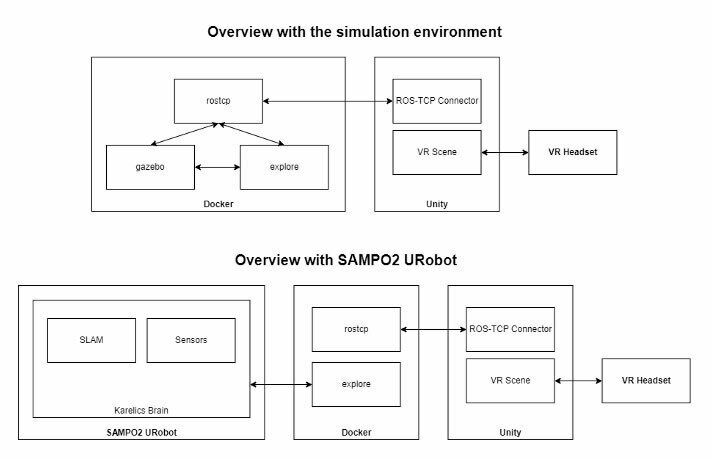

The project can be separated into two main environments: simulation and real robot. Figure 1 illustrates the different environments, with the real robot being specifically defined as the SAMPO2 URobot for our project.

The simulation environment includes the following Docker containers:

- rostcp: handles communication with Unity

- gazebo: includes Turtlebot3 Gazebo simulation and Rviz instance

- explore: includes the autonomous exploration algorithm

For use with a real robot, the gazebo container is not necessary and should be disabled to avoid data overlap. In both use cases, the Unity scene is fitted with the ROS-TCP Connector plugin to communicate with ROS2 and a VR Scene for visualization of the data with a VR Headset.

Robot Navigation

Robots navigate in a similar fashion to humans. To move from one point to another:

- The robot needs to know the place (Mapping)

- Then it needs to know where it is in the environment (Localization)

- Next, the robot needs to plan how to move between two points (Path Planning)

- Finally, it needs to send messages to the wheels to make the robot follow the path while avoiding obstacles (Robot Control and Obstacle Avoidance)

In robotics, maps are mainly acquired to enable autonomous navigation. However, mapping with robots is also useful for exploring dangerous environments or places that are inaccessible to human beings. For this reason, autonomous exploration strategies have been developed that let the robot choose the next location to explore in order to improve its map and localization estimates. The integration of a SLAM algorithm, an exploration strategy, and a path planning/navigation algorithm allows the robot to autonomously explore and map an unknown environment using its sensor data.

Autonomous exploration

Exploration strategies place an emphasis on autonomously mapping as much of an unknown environment as possible, and in the shortest time possible.

An Occupancy Grid Map is a map segmented into cells, where each cell is assigned a probability of occupancy:

- probability of zero = free from obstacles = OPEN

- probability of one = OCCUPIED

- probability of 0.5 = no information about the occupancy of the cell/not yet explored = UNKNOWN
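
The three cell states above can be sketched in a few lines of Python. This is only an illustration, not code from the project: the names `CellState`, `classify_cell` and `classify_grid` are hypothetical, and the two thresholds are assumed values chosen so that probabilities near 0.5 count as UNKNOWN.

```python
from enum import Enum


class CellState(Enum):
    OPEN = "open"
    OCCUPIED = "occupied"
    UNKNOWN = "unknown"


def classify_cell(p, free_below=0.2, occupied_above=0.65):
    """Map an occupancy probability in [0, 1] to a cell state.

    The thresholds are assumptions for this sketch, not values
    taken from the project.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError(f"probability out of range: {p}")
    if p < free_below:
        return CellState.OPEN
    if p > occupied_above:
        return CellState.OCCUPIED
    # Anything in between carries too little information to decide.
    return CellState.UNKNOWN


def classify_grid(grid):
    """Classify every cell of a 2D grid given as a list of rows."""
    return [[classify_cell(p) for p in row] for row in grid]
```

For example, `classify_grid([[0.0, 0.5], [1.0, 0.5]])` labels the first column OPEN and OCCUPIED and the second column UNKNOWN.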

A popular and simple exploration strategy is the Nearest Frontier approach. This algorithm analyzes an Occupancy Grid Map and detects all potential borders (called candidate frontiers) between cells marked as OPEN and cells marked as UNKNOWN. It then computes the distance between the robot’s current pose and each frontier, and chooses the frontier closest to the robot as the next location to explore. In this project, the Nearest Frontier approach was implemented using code from the m-explore-ros2 repository, a port of the popular explore-lite ROS package.
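
To make the idea concrete, here is a minimal sketch of nearest-frontier selection on a small grid. It is not the m-explore-ros2 implementation: the function names and the integer cell codes are hypothetical, each frontier cell is treated on its own rather than grouped into a larger frontier, and straight-line distance in grid cells stands in for the actual travel distance.

```python
import math

# Hypothetical cell codes for this sketch.
OPEN, OCCUPIED, UNKNOWN = 0, 1, -1


def find_frontier_cells(grid):
    """Return all OPEN cells that touch at least one UNKNOWN cell
    (4-connected neighbourhood), as (row, col) tuples."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != OPEN:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break  # one UNKNOWN neighbour is enough
    return frontiers


def nearest_frontier(grid, robot_cell):
    """Pick the frontier cell closest to the robot, or None when the
    map has no frontier left (exploration is finished)."""
    frontiers = find_frontier_cells(grid)
    if not frontiers:
        return None
    return min(frontiers, key=lambda cell: math.dist(cell, robot_cell))


grid = [
    [OPEN, OPEN,     UNKNOWN],
    [OPEN, OCCUPIED, UNKNOWN],
    [OPEN, OPEN,     OPEN],
]
goal = nearest_frontier(grid, robot_cell=(2, 0))  # (2, 2)
```

In a full system the chosen cell would be converted to a goal pose and handed to the navigation stack, and the search would be repeated as the map grows until no frontier remains.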

Repository and Videos

More details about our project can be found in our GitHub repository: git repository.

You can watch a short video describing the XploreR Project here: XploreR video.

A teaser video is also available at the following link: XploreR Teaser.

About us

The group members of the project are Artemis Georgopoulou, Fabiano Junior Maia Manschein, Shani Israelov and Yasmina Feriel Djelil. We are master’s students in the Erasmus Mundus Japan program, Master of Science in Imaging and Light in Extended Reality (IMLEX). This programme brings together image conversion, lighting and computer science and is implemented by a consortium of four universities: the University of Eastern Finland, Toyohashi University of Technology (Japan), University Jean Monnet Saint-Etienne (France) and KU Leuven (Belgium).

The choice of this project subject was linked to our interest in SLAM and XR-related subjects. As such, we decided to enhance autonomous navigation with VR visualization and interaction.

As the group members came from diverse backgrounds, dividing the tasks and making sure that everyone had a good understanding of the project content was quite challenging. Thankfully, our mentors at Karelics helped us overcome this. Most of the technologies used in this project were new to us, and they helped us understand both the concepts and how the distinct parts on the robot and ROS side worked.

We would like to sincerely thank Karelics for the support they gave us throughout this project.